DeepSeek V3.1 vs GPT-5 vs Claude 4.1: AI Model Comparison

Artificial intelligence in 2025 is no longer just about building smarter chatbots. It has become the foundation for startups, enterprises, and governments worldwide. At the center of this revolution stand three powerful models: DeepSeek V3.1, GPT-5, and Claude 4.1.

OpenAI and Anthropic dominate Western markets with GPT-5 and Claude 4.1, while DeepSeek V3.1 has emerged as a serious challenger from China. What makes this comparison fascinating is not just performance but the philosophy behind each model — cost, openness, safety, and global positioning.

This article compares DeepSeek V3.1, GPT-5, and Claude 4.1 without leaning on technical jargon. We'll look at what each model offers, where it shines, where it falls short, and most importantly, which one makes sense for your business or personal use case.

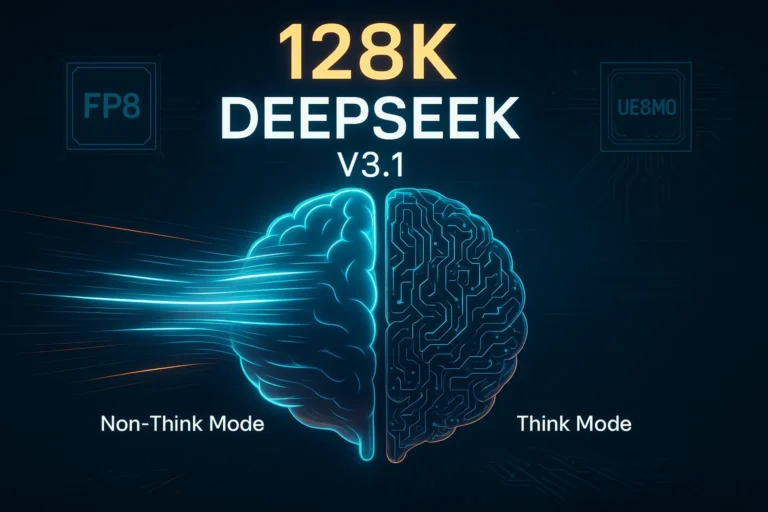

1. Understanding DeepSeek V3.1

DeepSeek V3.1 is not just an incremental update; it represents a new direction in AI development. Built under China’s AI-first strategy, DeepSeek aims to provide a high-performance, low-cost, and open-source alternative to Western models.

One of its standout features is its 128K token context window, which allows users to feed massive documents or long conversations without losing coherence. This makes DeepSeek V3.1 particularly strong in scenarios such as research analysis, legal review, or knowledge management systems.

Another innovation is hybrid inference: a single model supports both a lightweight non-thinking mode and a heavier thinking mode, selected per request (for example through the chat template or API endpoint) rather than by running separate models. This means simple queries can be answered faster and with fewer resources, while complex prompts can still get deep reasoning.
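To make the two-mode idea concrete, here is a minimal sketch of how an application might decide which mode to request for a given prompt. Everything in it is an assumption for illustration: the function name `choose_mode`, the keyword list, and the length threshold are hypothetical and are not part of any DeepSeek API. A real integration would pass the chosen mode to whatever switch the provider documents.

```python
# Hypothetical caller-side router: decides whether a prompt deserves the
# heavier "thinking" mode or the lighter "non-thinking" mode.
# The keywords and threshold are illustrative assumptions, not DeepSeek settings.

REASONING_HINTS = ("prove", "debug", "step by step", "analyze", "compare")
LONG_PROMPT_CHARS = 2000  # assumed cutoff above which a prompt counts as complex


def choose_mode(prompt: str) -> str:
    """Return 'thinking' for prompts that look complex, else 'non-thinking'."""
    text = prompt.lower()
    if len(text) > LONG_PROMPT_CHARS:
        return "thinking"
    if any(hint in text for hint in REASONING_HINTS):
        return "thinking"
    return "non-thinking"


if __name__ == "__main__":
    print(choose_mode("What is the capital of France?"))
    print(choose_mode("Debug this function and explain the fix step by step."))
```

A keyword heuristic like this is crude, but it shows the trade-off: cheap, fast answers by default, with the heavier mode reserved for prompts that appear to need it.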

👉 If you’re a startup building a knowledge management tool, DeepSeek V3.1 offers the perfect mix of long memory, open licensing, and low cost.

2. GPT-5: The Enterprise Powerhouse in AI Benchmarks 2025

When looking at DeepSeek V3.1 vs GPT-5 in an AI model comparison, GPT-5 stands out as OpenAI’s flagship enterprise solution. As the successor to GPT-4, GPT-5 is built for scale, featuring a massive 272K context window — ideal for handling complex datasets, legal contracts, or entire technical manuals in a single query.

What makes GPT-5 unique is its deep integration with Microsoft’s ecosystem. From Copilot in Office to seamless deployment on Azure APIs, it offers enterprises a plug-and-play experience that DeepSeek has not yet matched at this scale. Its multimodal capabilities (text, image, and audio) further expand its versatility, giving businesses more flexibility in how they apply AI.

In the context of AI benchmarks 2025, GPT-5 may not be the cheapest, but it consistently delivers on reliability, compliance, and scalability. Backed by OpenAI’s governance framework and global infrastructure, it remains the safer choice for Fortune 500 companies or any business handling sensitive data.

👉 If compliance, data security, and enterprise-grade reliability are your top priorities, GPT-5 wins in this AI model comparison — even if it comes at a higher cost.

3. Claude 4.1: The Safety-First AI Model in 2025

In the ongoing AI model comparison of DeepSeek V3.1 vs GPT-5 vs Claude 4.1, Anthropic’s Claude 4.1 (released as Claude Opus 4.1) takes a unique position by prioritizing safety and ethical AI. Built on the principles of Constitutional AI, Claude is designed to minimize harmful outputs, making it one of the most trusted models in regulated industries.

While it doesn’t compete with GPT-5’s massive 272K context window or DeepSeek’s unmatched cost efficiency, Claude offers something equally valuable: trust and reliability. With a context window close to 200K tokens, it performs strongly in handling long documents while focusing on transparency and interpretability rather than raw speed.

In the context of AI benchmarks 2025, Claude 4.1 often scores higher on reasoning and ethical alignment tests. This makes it a preferred option for sectors like healthcare, government, and finance, where safety and compliance outweigh performance or cost.

👉 If your organization operates in high-stakes environments where accuracy, safety, and ethical AI matter most, Claude 4.1 is the clear winner in this AI model comparison.

4. How They Compare on Core Features

When we compare the three models side by side, the differences become clear. DeepSeek V3.1 wins in terms of cost efficiency and openness thanks to its MIT license, making it the most developer-friendly option. GPT-5 dominates with its massive context window, multimodal abilities, and enterprise ecosystem. Claude 4.1 stands out with its ethical framework and careful reasoning, making it the most “trustworthy” of the three.

DeepSeek V3.1 vs GPT-5 vs Claude 4.1:

| Feature | DeepSeek V3.1 | GPT-5 (OpenAI) | Claude 4.1 (Anthropic) |
|---|---|---|---|
| Context Window | 128K | 272K | ~200K |
| License | MIT (Open-source) | Proprietary | Proprietary |
| Cost | Up to 98% cheaper than GPT-5 | High | Moderate |
| Ecosystem | Community-driven | Microsoft ecosystem | Safety-focused clients |
| Best For | Startups, researchers | Enterprises, developers | Safety-critical fields |

These trade-offs highlight a simple truth: the “best” model depends entirely on who you are and what you need.

5. The Cost Efficiency Battle

Cost is one of the most overlooked factors when businesses choose an AI model. Many assume that performance benchmarks should guide the decision, but in practice, pricing determines scalability.

Here, DeepSeek V3.1 has a clear advantage. It is reported to deliver results at up to 98% lower cost than GPT-5, largely because of its optimized architecture and hybrid inference. For startups or research labs with limited budgets, this can be the difference between deploying AI widely and not deploying it at all.
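To see what a saving of that size means at scale, the short sketch below works through the arithmetic. The baseline price and the monthly token volume are placeholder assumptions, not published prices from any vendor; only the 98% figure comes from the claim above.

```python
# Worked arithmetic for an "up to 98% lower cost" claim.
# BASELINE_PRICE_PER_M and the workload size are placeholders, not real prices.

BASELINE_PRICE_PER_M = 10.00  # assumed USD per 1M tokens for the pricier model
CLAIMED_SAVINGS = 0.98        # the "up to 98%" figure quoted in this article


def monthly_cost(tokens_per_month: int, price_per_million: float) -> float:
    """Cost in USD for a monthly token volume at a per-million-token price."""
    return tokens_per_month / 1_000_000 * price_per_million


def discounted_price(price_per_million: float, savings: float) -> float:
    """Per-million price after applying a fractional saving (0.98 means 98%)."""
    return price_per_million * (1.0 - savings)


if __name__ == "__main__":
    volume = 500_000_000  # assumed workload: 500M tokens per month
    baseline = monthly_cost(volume, BASELINE_PRICE_PER_M)
    cheaper = monthly_cost(
        volume, discounted_price(BASELINE_PRICE_PER_M, CLAIMED_SAVINGS)
    )
    print(f"baseline: ${baseline:,.2f}  discounted: ${cheaper:,.2f}")
```

With these placeholder numbers, a bill of $5,000 per month drops to about $100. Substitute current list prices and your own token volume before drawing conclusions, since "up to" savings depend heavily on the input/output mix.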

GPT-5, by contrast, is the most expensive option. It justifies this with enterprise-level reliability, but the pricing can be prohibitive for small to mid-sized businesses. Claude 4.1 sits in the middle, offering reasonable cost but nowhere near the savings that DeepSeek provides.

👉 For bootstrapped startups, DeepSeek is the clear winner in cost efficiency.

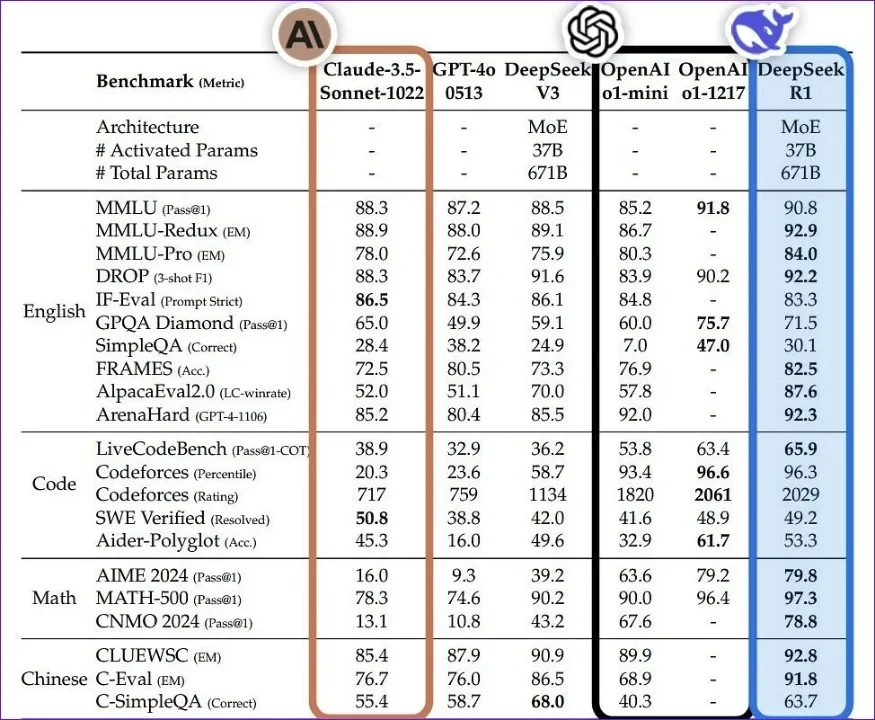

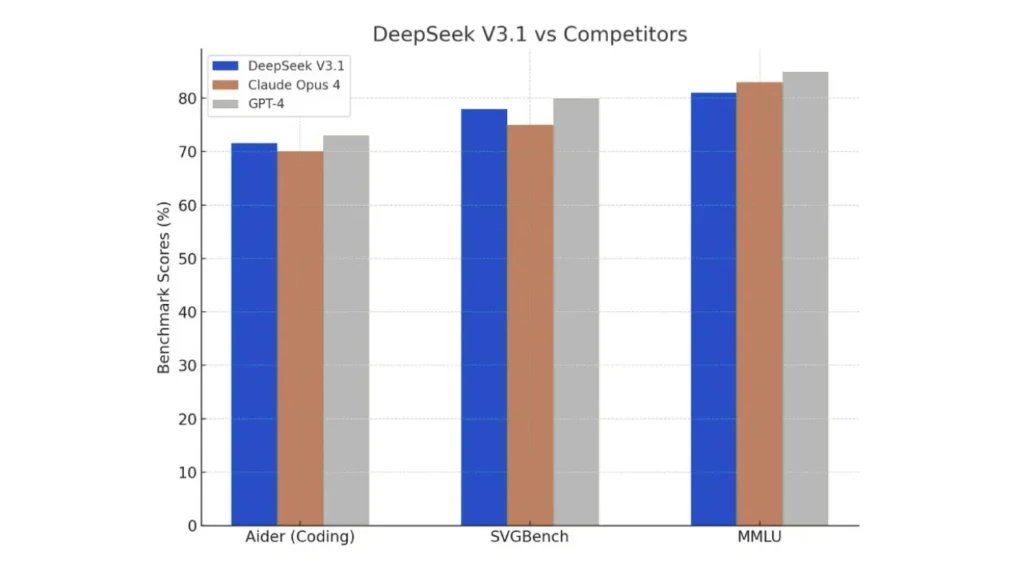

6. Benchmark Performance Analysis

Performance benchmarks show each model’s strengths in different domains.

On coding and software engineering tasks, both GPT-5 and DeepSeek V3.1 outperform most open-source rivals. DeepSeek’s hybrid inference gives it an edge in long, context-heavy programming scenarios. On reasoning tasks, Claude 4.1 often scores higher, which is commonly attributed to its cautious, step-by-step reasoning style and a lower tendency to hallucinate.

For long-context tasks, GPT-5 holds the lead with its 272K context window, but DeepSeek’s 128K context is more than enough for most use cases, particularly when paired with its cost efficiency.
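A quick way to reason about "more than enough" is to estimate whether a given document fits in each window. The sketch below does that using the window sizes quoted in this article and a rough rule of four characters per token. That ratio, the output reserve, and the function names are assumptions for illustration. Real tokenizers differ by model and language, so treat the result as a first estimate only.

```python
# Rough check of which context windows a document fits into.
# Window sizes are the figures quoted in this article (Claude's is approximate).
# CHARS_PER_TOKEN is a crude assumption; real tokenizers vary.

CONTEXT_WINDOWS = {
    "DeepSeek V3.1": 128_000,
    "GPT-5": 272_000,
    "Claude 4.1": 200_000,
}
CHARS_PER_TOKEN = 4  # assumed average for English text


def estimate_tokens(text: str) -> int:
    """Estimate token count from character length, rounding up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def models_that_fit(text: str, reserve_for_output: int = 4_000) -> list[str]:
    """Return the models whose window holds the text plus an output reserve."""
    needed = estimate_tokens(text) + reserve_for_output
    return [name for name, window in CONTEXT_WINDOWS.items() if needed <= window]


if __name__ == "__main__":
    document = "x" * 600_000  # stand-in for a document of roughly 150K tokens
    print(models_that_fit(document))
```

Under these assumptions, a document of about 150K tokens fits the two larger windows but not the 128K one, so it would need chunking or retrieval there.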

The takeaway? Benchmarks tell part of the story, but the real-world value depends on use cases, not just test scores.

7. Contextual Use Cases: Who Should Choose What?

- DeepSeek V3.1 is best for startups, research labs, and developers who need affordability and openness. Imagine a small edtech startup building a personalized tutoring system — DeepSeek lets them scale without burning through their budget.

- GPT-5 suits large enterprises with compliance needs, such as multinational banks or law firms. These organizations care less about cost and more about scalability, integration, and regulatory trust.

- Claude 4.1 is the right fit for high-stakes industries like healthcare or government, where the priority is ensuring that AI makes safe, ethical, and explainable decisions.

👉 Instead of asking “Which is best overall?”, the smarter question is: “Which is best for my context?”


8. Expert Insights and Predictions

Looking ahead, analysts suggest that DeepSeek could disrupt the AI market globally if adoption outside China increases. Its open-source MIT license and low cost could shift the balance away from heavily commercialized models like GPT-5.

However, challenges remain. DeepSeek still needs stronger community adoption and developer trust outside of China. Infrastructure support is another concern — while GPT-5 runs smoothly on Azure, DeepSeek depends more on localized hardware optimization.

The geopolitical angle cannot be ignored either. DeepSeek V3.1 represents China’s push for AI independence, a move that has already influenced global semiconductor and AI policy.

💡 My perspective: Many businesses make the mistake of choosing a model based only on benchmarks without considering integration costs, ecosystem support, or long-term flexibility. These often matter more than raw performance.

9. Final Verdict

There is no single winner in the battle between DeepSeek V3.1, GPT-5, and Claude 4.1.

- Choose DeepSeek V3.1 if you value cost efficiency, open-source flexibility, and accessibility.

- Choose GPT-5 if you are an enterprise needing compliance, multimodality, and scalability.

- Choose Claude 4.1 if you prioritize safety, ethics, and trustworthiness above all else.

The real question isn’t “Which is the most powerful model?” but rather “Which model aligns with your goals and resources?”

10. Conclusion

The AI model comparison in 2025 highlights more than just performance — it underscores the balance between affordability, openness, reliability, and ethical responsibility. DeepSeek V3.1 shows that a cost-effective, open approach can disrupt the field, GPT-5 stands strong as the enterprise leader, and Claude 4.1 sets the bar for safe and responsible AI. Ultimately, the best model depends on your unique goals, making the decision a matter of alignment rather than a one-size-fits-all choice.