In August 2025, Chinese AI startup DeepSeek released DeepSeek V3.1, a notable evolution of its model lineup. What makes this release stand out is its Hybrid Inference Architecture, which offers two modes, reasoning and non-reasoning, so users can choose between fast responses and slower, deeper reasoning-based outputs. In the company's official app and web platform, the new DeepThink button switches between the two modes instantly.

DeepSeek V3.1 also supports a context length of up to 128K tokens, strengthened by additional long-context training, making it a powerful contender against Western AI models. With its combination of speed, reasoning, and long-context capacity, V3.1 positions DeepSeek as a formidable player in the global AI race.

Model Update — DeepSeek V3.1 Highlights

🔹 V3.1 Base: Built on top of V3 with 840B tokens of continued pretraining for long-context extension.

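🔹 Tokenizer & Chat Template Updated: The tokenizer configuration and chat template have changed since V3, so check the new tokenizer configuration before upgrading an existing deployment.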

🔗 Open-source Weights:

Technical Deep Dive

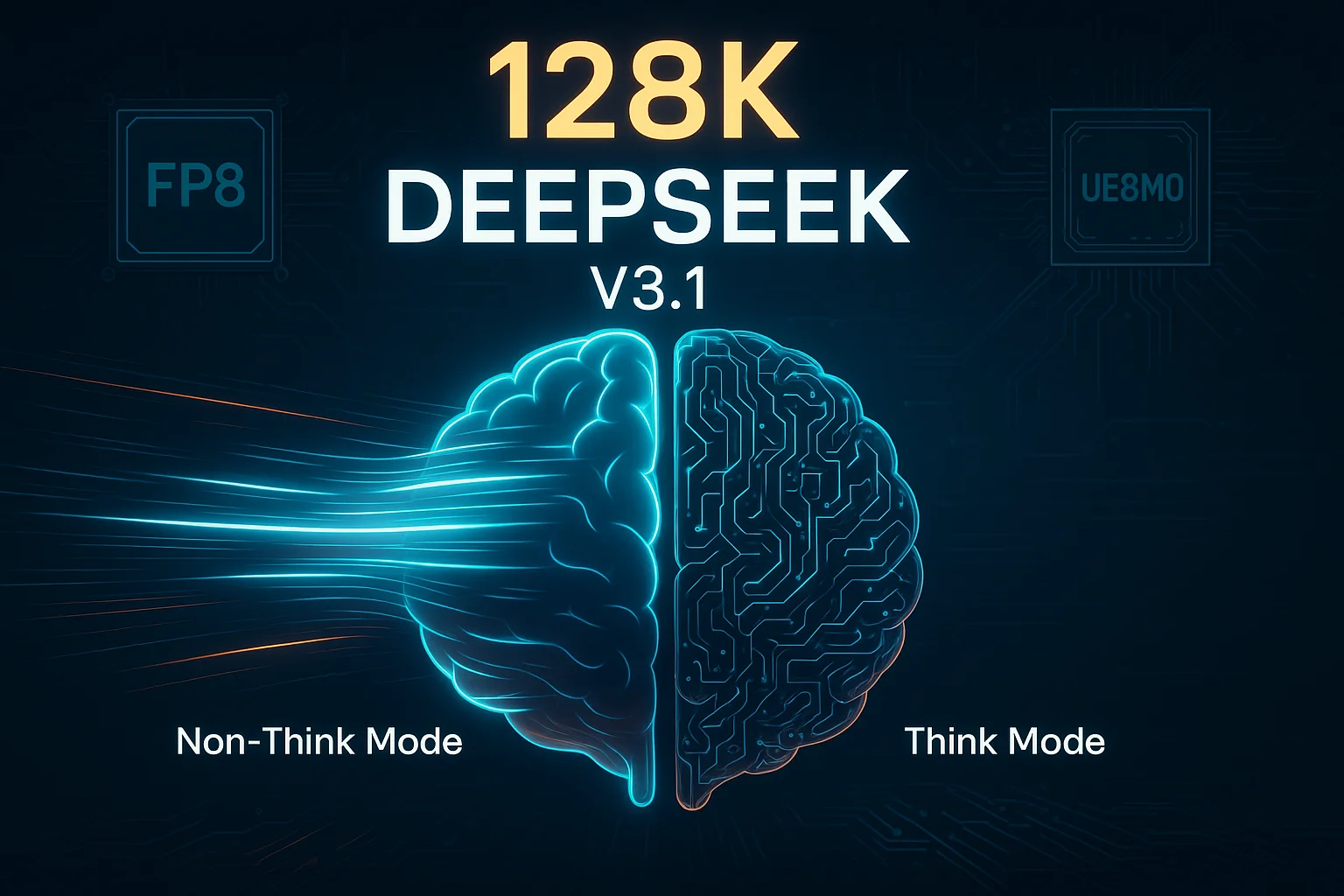

1. Hybrid Inference Architecture: Think vs. Non-Think Modes

The headline feature of DeepSeek V3.1 is its Hybrid Inference Architecture: a single model that can run in either of two distinct modes:

- Non-Think Mode: Designed for speed and efficiency, this mode delivers fast, high-quality responses without engaging in deep reasoning. Ideal for quick queries and real-time applications where latency matters.

- Think Mode: When a task requires complex reasoning or problem-solving, Think Mode has the model work through an explicit chain of reasoning before giving its final answer, producing more structured outputs and improved accuracy in advanced scenarios such as coding, analysis, and research.

This dual-mode design lets one model trade speed for reasoning depth as each task requires, making V3.1 adaptable for both consumer-facing apps and enterprise-level workloads.
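For the open weights, the mode switch happens in the prompt rather than in the model's layers. Based on the chat template in DeepSeek's published model card, a first-turn prompt ends with `<think>` to request Think Mode or `</think>` to request Non-Think Mode. The minimal Python sketch below builds those two prefixes. The special-token spellings are our transcription and should be checked against the official tokenizer configuration, the helper name `first_turn_prefix` is hypothetical, and multi-turn conversations follow additional rules not covered here.

```python
# Special-token spellings as we read them from DeepSeek's published template:
# a full-width vertical bar (U+FF5C) and the "lower one eighth block" (U+2581)
# used as a word separator. Verify against the official tokenizer config.
BAR = "\uff5c"
SEP = "\u2581"
BOS = f"<{BAR}begin{SEP}of{SEP}sentence{BAR}>"
USER = f"<{BAR}User{BAR}>"
ASSISTANT = f"<{BAR}Assistant{BAR}>"


def first_turn_prefix(system_prompt: str, query: str, think: bool) -> str:
    """Build a first-turn prompt prefix for the chosen mode (hypothetical helper).

    Think Mode opens a reasoning block with <think>, so the model writes its
    reasoning before the answer. Non-Think Mode closes that block immediately
    with </think>, so the model goes straight to the answer.
    """
    mode_token = "<think>" if think else "</think>"
    return f"{BOS}{system_prompt}{USER}{query}{ASSISTANT}{mode_token}"
```

For example, `first_turn_prefix("You are a helpful assistant.", "Summarize this report.", think=False)` returns a prefix ending in `</think>`, so generation starts directly with the answer.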

2. Extended Context Length: Up to 128K Tokens

DeepSeek V3.1 strengthens its ability to handle and retain large volumes of contextual data. With a context window of up to 128,000 tokens, it can process entire books, multi-part technical documents, or long-form conversations without losing coherence.

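This capability comes from a two-phase long-context extension of the V3 base checkpoint: DeepSeek reports expanding the 32K-token phase to 630B training tokens and the 128K-token phase to 209B tokens, which together make up the roughly 840B tokens of continued pretraining. The aim is for the model to keep its precision and logical consistency even with very large inputs.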
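In practice, a 128K window is a shared budget: the prompt and the model's reply generally have to fit in it together. The Python sketch below, with hypothetical names (`Document`, `pack_into_context`), walks documents in their original order and keeps each one that still fits after reserving room for the output. It assumes token counts were already measured with the model's own tokenizer and treats "128K" as 128,000 tokens, following this article; a given deployment may use a different exact limit.

```python
from dataclasses import dataclass

# Assumption: "128K" read as 128,000 tokens, the figure used in this article.
CONTEXT_LIMIT = 128_000


@dataclass
class Document:
    name: str
    tokens: int  # token count measured with the model's own tokenizer


def pack_into_context(docs, reserved_for_output=8_000, limit=CONTEXT_LIMIT):
    """Keep, in order, every document that still fits in the input budget.

    Tokens reserved for the reply are subtracted first, because prompt and
    response share the same window. Returns (included, skipped).
    """
    budget = limit - reserved_for_output
    if budget <= 0:
        raise ValueError("reserved_for_output leaves no room for input")
    included, skipped, used = [], [], 0
    for doc in docs:
        if used + doc.tokens <= budget:
            included.append(doc)
            used += doc.tokens
        else:
            skipped.append(doc)  # too large for what is left; try later docs
    return included, skipped
```

With documents of 60,000, 70,000, and 50,000 tokens and the default 8,000-token reserve, the first and third fit (110,000 tokens) while the second is skipped. Keeping the original order is a deliberate choice here; a smarter packer could prioritize by relevance instead.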

3. Hardware Optimization: FP8 Precision and the UE8M0 Scale Format

To speed up inference and lower energy consumption, V3.1 is trained with FP8 precision using the UE8M0 scale format on both weights and activations. UE8M0 is an unsigned, exponent-only 8-bit format (8 exponent bits, 0 mantissa bits) for power-of-two scaling factors, and DeepSeek has said it is designed for next-generation hardware, including upcoming domestic chips.

These optimizations broaden compatibility with advanced accelerators and strengthen DeepSeek's position in AI hardware-software co-optimization, potentially reducing dependency on traditional Western GPU ecosystems.
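To make the idea concrete, here is a simplified Python sketch of block-scaled FP8 quantization in which each block shares one power-of-two scale stored as a UE8M0 exponent byte. It shows the general microscaling technique only. The 32-element block size comes from the OCP Microscaling (MX) specification, the ceiling-based scale choice (so no element overflows) is our own, and DeepSeek's actual kernels, block shapes, and rounding details may differ. FP8 E4M3 rounding is simulated with ordinary Python floats.

```python
import math

E4M3_MAX = 448.0   # largest finite value in FP8 E4M3
UE8M0_BIAS = 127   # UE8M0 stores only an exponent byte e: scale = 2**(e - 127)
BLOCK_SIZE = 32    # assumption: block size borrowed from the OCP MX spec


def ue8m0_scale_for(amax: float) -> int:
    """Exponent byte of the smallest power-of-two scale mapping amax into E4M3."""
    if amax == 0.0:
        return UE8M0_BIAS                 # scale 1.0 for an all-zero block
    mant, exp = math.frexp(amax / E4M3_MAX)  # ratio = mant * 2**exp, 0.5 <= mant < 1
    log2_ceil = exp - 1 if mant == 0.5 else exp
    return max(0, min(254, log2_ceil + UE8M0_BIAS))  # byte 255 is reserved for NaN


def decode_ue8m0(e: int) -> float:
    return 2.0 ** (e - UE8M0_BIAS)


def round_to_e4m3(v: float) -> float:
    """Round to the nearest E4M3 value (ties to even), saturating at +/-448."""
    a = abs(v)
    if a == 0.0:
        return 0.0
    if a < 2.0 ** -6:                     # subnormal range: fixed step of 2**-9
        step = 2.0 ** -9
    else:
        _, exp = math.frexp(a)            # floor(log2(a)) == exp - 1
        step = 2.0 ** (exp - 1 - 3)       # 3 mantissa bits per binade
    q = min(round(a / step) * step, E4M3_MAX)  # round() breaks ties to even
    return math.copysign(q, v)


def quantize_blocks(values):
    """Return a list of (scale_byte, fp8_values) pairs, one per block."""
    out = []
    for i in range(0, len(values), BLOCK_SIZE):
        block = values[i:i + BLOCK_SIZE]
        e = ue8m0_scale_for(max(abs(x) for x in block))
        s = decode_ue8m0(e)
        out.append((e, [round_to_e4m3(x / s) for x in block]))
    return out


def dequantize_blocks(blocks):
    return [q * decode_ue8m0(e) for e, qs in blocks for q in qs]
```

Because every scale is a power of two, applying or removing it only shifts exponents rather than requiring a full multiply, which is one reason exponent-only scale formats suit simple hardware.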

DeepSeek V3.1 Benchmarks & Performance

The DeepSeek V3.1 benchmarks suggest that this update is more than a feature upgrade. Compared with its predecessor and several competing models, V3.1 posts strong results in reasoning, coding, and multi-task performance. Here's what stands out:

1. Significant Benchmark Gains

On SWE-bench (real-world software engineering tasks) and Terminal-bench (agent tasks in a command-line environment), DeepSeek's published results show V3.1 improving by more than 40% over V3 and earlier R1 variants.

It also outperforms several open-source alternatives and rivals such as OpenAI's gpt-oss-20b, particularly in logical reasoning, fiction writing, and code generation, according to some independent reviews.
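A figure such as "40%+" can mean either a relative improvement or a gain in percentage points, and at these score levels the two readings differ a lot. The worked example below uses hypothetical scores, not DeepSeek's published numbers, to show both:

```latex
\Delta_{\text{rel}} = \frac{s_{\text{new}} - s_{\text{old}}}{s_{\text{old}}},
\qquad
\Delta_{\text{abs}} = s_{\text{new}} - s_{\text{old}}

% Hypothetical scores: s_old = 45, s_new = 63
\Delta_{\text{rel}} = \frac{63 - 45}{45} = 0.40 \;(40\%),
\qquad
\Delta_{\text{abs}} = 63 - 45 = 18 \text{ percentage points}
```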

2. Hybrid Mode Impact

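- Think Mode consistently scores higher than Non-Think Mode on reasoning-heavy benchmarks. DeepSeek reports that it reaches answer quality comparable to DeepSeek-R1-0528 while responding more quickly, although generating a reasoning trace still adds latency compared with Non-Think Mode.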

- Non-Think Mode remains competitive in speed benchmarks, ensuring responsiveness for consumer apps and real-time chat use cases.
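On DeepSeek's hosted API, the same trade-off is made per request by model name. The Python sketch below assumes the mapping DeepSeek documented at the V3.1 launch (`deepseek-chat` for Non-Think Mode, `deepseek-reasoner` for Think Mode) and pairs it with a hypothetical routing rule: the task labels, the 5-second latency threshold, and the helper names are illustrative choices, not DeepSeek guidance. It only builds an OpenAI-style chat-completions request body and does not send anything.

```python
import json

# Mapping as documented by DeepSeek at the V3.1 launch; check current docs.
NON_THINK_MODEL = "deepseek-chat"
THINK_MODEL = "deepseek-reasoner"

# Hypothetical routing rule: labels and threshold are illustrative only.
REASONING_TASKS = {"coding", "math", "analysis", "research"}
THINK_MIN_LATENCY_MS = 5_000


def choose_model(task: str, latency_budget_ms: int) -> str:
    """Use Think Mode only for reasoning-heavy tasks that can afford the wait."""
    if task in REASONING_TASKS and latency_budget_ms >= THINK_MIN_LATENCY_MS:
        return THINK_MODEL
    return NON_THINK_MODEL


def build_request(prompt: str, task: str, latency_budget_ms: int) -> str:
    """Return a chat-completions request body as a JSON string."""
    body = {
        "model": choose_model(task, latency_budget_ms),
        "messages": [{"role": "user", "content": prompt}],
    }
    return json.dumps(body)
```

Routing on an explicit latency budget keeps real-time chat on Non-Think Mode by default, and only tasks that both need reasoning and can tolerate the longer response time pay for it.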

3. Long-Context Superiority

With the DeepSeek V3.1 context window extending up to 128K tokens, the model performs strongly on long-context workloads:

- Excels in multi-document Q&A, long-chain reasoning, and context-dependent programming tasks.

- Maintains semantic coherence and factual accuracy across extensive prompts—where many models degrade.

4. Energy & Hardware Efficiency

Thanks to FP8 training with UE8M0 scales, V3.1 is designed to run efficiently on next-generation accelerators and domestic chips, which can reduce inference costs while keeping accuracy competitive with state-of-the-art models.
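Much of the efficiency case comes down to bytes per value: an FP8 weight takes one byte, half the size of a BF16 weight. The Python sketch below does that arithmetic for weight storage only, using about 671B total parameters (the figure DeepSeek reports for its V3-series architecture) as an illustrative input. The optional overhead of one UE8M0 scale byte per 32 values is an assumption borrowed from MX-style block scaling, not DeepSeek's documented layout, and the estimate ignores activations, the KV cache, and any layers kept at higher precision.

```python
def weight_memory_gb(num_params: float, bits_per_value: int,
                     scale_block: int = 0, scale_bits: int = 8) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes).

    If scale_block > 0, one scale of scale_bits is added per block of values
    (assumed MX-style overhead, not a documented DeepSeek layout).
    """
    total_bits = num_params * bits_per_value
    if scale_block:
        total_bits += (num_params / scale_block) * scale_bits
    return total_bits / 8 / 1e9


PARAMS = 671e9  # illustrative input: total parameters reported for V3-series models
bf16 = weight_memory_gb(PARAMS, 16)                      # 16-bit weights
fp8 = weight_memory_gb(PARAMS, 8)                        # 8-bit weights, no scales
fp8_scaled = weight_memory_gb(PARAMS, 8, scale_block=32)  # plus one scale byte per 32
```

Under these assumptions, BF16 weights need about 1,342 GB, FP8 weights about 671 GB, and per-block scales add roughly 21 GB on top of that, so the scale overhead is small next to the halving.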

Market Impact: Boosting Chinese Semiconductor Stocks

The release of DeepSeek V3.1 had an immediate ripple effect beyond the AI industry: its hardware compatibility strategy significantly impacted the Chinese semiconductor market. While many Western models are optimized primarily for NVIDIA GPUs, V3.1 was trained with FP8 precision using the UE8M0 scale format, which DeepSeek said is designed for upcoming domestically developed AI accelerators.

This alignment with local chip architectures is more than a technical detail—it’s a strategic move that positions DeepSeek as a champion of China’s technological self-reliance. Following the announcement, major Chinese semiconductor companies, including Cambricon Technologies, saw stock prices surge by nearly 20%. Analysts attribute this spike to growing investor confidence in the viability of a domestic AI ecosystem, reducing dependence on Western hardware.

In essence, DeepSeek V3.1 is not just an AI milestone—it’s a geopolitical statement, signaling China’s commitment to building a fully localized AI stack from chips to software, potentially reshaping the global semiconductor landscape.

Conclusion

The launch of DeepSeek V3.1 is more than an incremental upgrade: it represents a strategic and technological breakthrough in the global AI race. With its Hybrid Inference Architecture, 128K-token context window, and FP8 optimization for domestic chips, V3.1 positions DeepSeek as a serious contender against Western AI giants.

Its impact goes beyond the DeepSeek V3.1 benchmarks; it has influenced financial markets, boosted confidence in China's semiconductor industry, and reinforced the vision of an independent AI ecosystem. By making its weights openly available and introducing developer-friendly features, DeepSeek has also embraced open innovation, encouraging rapid community adoption and real-world applications.

As the world watches, DeepSeek V3.1 is not just shaping AI technology—it’s reshaping the balance of power in global innovation. The next question is: how will competitors respond, and what does this mean for the future of AI leadership?